This blog is for Class XI Computer Science students in Nepal. It is a brief summary of Chapter 5 of the Computer Science book by Kriti's Publication. Please read the book for details.

1. Introduction

A computer is a man-made machine, so it cannot act independently like humans. We write programs to give computers the instructions they need to perform various tasks.

So, a program (code) is a set of instructions written for a computer, and programming (coding) is the systematic process of writing programs. A typical computer program is a collection of modules and sub-modules. It can contain multiple files, where some files hold code while others hold data.

A good computer program:

Is clear and simple to read and understand.

Gives the desired result.

Uses memory and CPU time efficiently.

Is easy to maintain and update to newer versions.

Is free of errors.

Find more in the book.

1.1 Introduction To Programming Languages

Just as humans communicate with each other using languages, computers also need languages to communicate with humans and with each other. The languages humans use to write instructions for computers are called programming languages.

A computer can only understand binary digits, i.e. 0 and 1. Inside the machine, these digits are represented by an electrical voltage being on (1) or off (0).

Some of the commonly used programming languages are C, C++, C#, PHP, Python, Java, JavaScript, etc. Every programming language should have the following features:

It can transfer instructions and data between the CPU and input/output devices.

It can perform arithmetic operations such as addition and subtraction.

It can perform logical tests, such as checking whether one value is greater or smaller than another.

Find more in the book.

1.2 Classification of Programming Languages

Programming languages can be classified into the following types:

Low-Level Language

High-Level Language (3GL)

Fourth-Generation Language (4GL)

Fifth-Generation Language (5GL)

1.2.1 Low Level

A low-level language is close to what a computer can directly understand, which makes it difficult for programmers to write. Low-level languages can be further classified into:

Machine Level Language (MLL)

Assembly Level Language (ALL)

Machine Level Language(MLL)

MLL was the first computer programming language, and it is still in use today, because every program must finally run as machine code. It consists of combinations of 0s and 1s, which represent the on and off states of the electric circuits in the CPU.

It is the most difficult way to write a computer program. It is also machine-dependent: instructions written for one type of processor will not work on another. For instance, machine code written for an Intel or AMD (x86) processor will not run on an ARM processor like those found in most smartphones.

Why do we use it, then, if it's such a hassle?

MLL programs run very fast, because the CPU executes them directly.

MLL does not need any translator (compiler, interpreter, or assembler), whereas programs in other languages must first be translated into MLL.

Assembly Level Language(ALL)

ALL is also a low-level language like MLL, created to overcome some of the difficulties of MLL. It is a little easier for programmers to write than MLL, and, being low-level, it executes very fast.

Unlike MLL, however, instructions are written as mnemonics (short symbolic codes such as ADD or MOV) instead of binary. ALL is also a machine-dependent language.

Since computers can only understand binary instructions, ALL must be translated into MLL. This translation is done by an assembler.

High Level Language(HLL)

Third Generation Language(3GL)

3GLs are easy to write and debug. Because they use English-like words, they must be translated into MLL using a compiler or an interpreter.

Unlike low-level languages, high-level languages are machine-independent, i.e. the same source program can run on different types of processors, as long as a compiler or interpreter is available for each one.

A program written in an HLL is called a source program. The code produced after translation by a compiler or interpreter is called an object program.

FORTRAN (Formula Translation), introduced in 1956 A.D., is considered the first high-level language. Other 3GLs include PASCAL, C, and C++.

Some of the advantages of high-level languages are:

They are easier to write and debug than low-level languages.

They are machine-independent.

Since they use English-like words, the code itself partly acts as documentation.

Fourth Generation Language(4GL)

4GLs were developed after 3GLs and are result-oriented: the programmer describes what result is wanted rather than exactly how to compute it. They also include database query languages. Structured Query Language (SQL), Perl, Python, Ruby, etc. are examples of fourth-generation languages. They are also machine-independent and need an interpreter for translation.

Fifth Generation Language(5GL)

Fifth-generation languages are still being developed. They aim to let programmers create programs using visual tools, artificial intelligence, and natural languages such as English. Examples of fifth-generation languages include LISP and PROLOG.

Compiler, Interpreter, and Assembler

These programs, called language translators, convert source programs into object programs: compilers and interpreters translate HLL code into low-level language, and assemblers translate assembly language into MLL.

Compiler

A compiler converts an HLL program into low-level language all at once. It reads the complete program and, if there are no errors, translates the whole program; otherwise, it reports the errors. The process of converting HLL to low-level language with a compiler is called compiling.

Interpreter

An interpreter serves the same purpose as a compiler, but it translates and executes the source code line by line instead of all at once, and it stops at the first line that contains an error. Because it translates each line every time the program runs, an interpreted program is generally slower than a compiled one.

Assembler

An assembler translates assembly language into MLL. Similar to an interpreter, an assembler translates the code line by line, since each line of assembly usually corresponds to exactly one machine instruction.

List of High-Level Languages

Some of the programming languages listed in the book are discussed briefly below. If you want to find out more, please read the book.

1. FORTRAN

FORTRAN (Formula Translation) is an HLL developed in 1956 by John Backus and his team at IBM. It is a powerful language for engineering and scientific calculations, and it is still in use more than 60 years after its creation.

2. LISP

LISP was developed in 1958 by John McCarthy at MIT, and it was first implemented on an IBM 704 computer. Its name comes from "list processing", and it is used in AI and pattern recognition.

3. PASCAL

PASCAL is a structured programming language developed by Niklaus Wirth in Switzerland in the 1970s. It is named in honor of the French mathematician, philosopher, and physicist Blaise Pascal.

4. C

C is a procedural language developed by Dennis Ritchie at Bell Labs in 1973 AD. The UNIX operating system was largely written in C. C gained popularity because of its speed and portability. Although it is an HLL, it also allows low-level operations, such as direct memory access through pointers. C is also known as the mother language, since many programming languages, such as C++ and Java, are based on C.

5. Java

Java is an object-oriented programming language developed by James Gosling and others at Sun Microsystems, USA, in 1994. It is a simple yet powerful language. Java is used in web and mobile applications.

6. .NET

Pronounced "dot net", .NET is Microsoft's common software development platform, which supports many programming languages within one framework. These include C# (C sharp), Visual Basic .NET, C++ (through C++/CLI), and J# (J sharp), which has since been discontinued. It is also used for the development of web and mobile-based applications.

7. JavaScript

JavaScript is a scripting language developed in 1995. It is used to add procedural code to web pages, either embedded within HTML or loaded from separate files. It runs in web browsers and has a syntax similar to Java and C.

8. PHP

PHP (Hypertext Preprocessor) is a very popular open-source, server-side scripting language. It was developed in 1995 by Rasmus Lerdorf. WordPress, a popular CMS platform, is built using PHP. PHP is also popular for accessing databases such as MySQL and Oracle.

9. Python

Python is a simple, interpreted, general-purpose programming language. It is also open source. It supports multiple programming paradigms and is used on many platforms for purposes such as scripting, hacking, web development, data analysis, and machine learning.

Bug

A bug is an error, mistake, or fault in a program that may lead to an unexpected result. Most bugs are caused by human errors in the source code. Debugging is the process of finding and correcting errors in the source code. Depending on the size of the program and the number of errors, debugging can take anywhere from a few seconds to months.

There are three types of errors:

Syntax error

Semantic error

Runtime Error

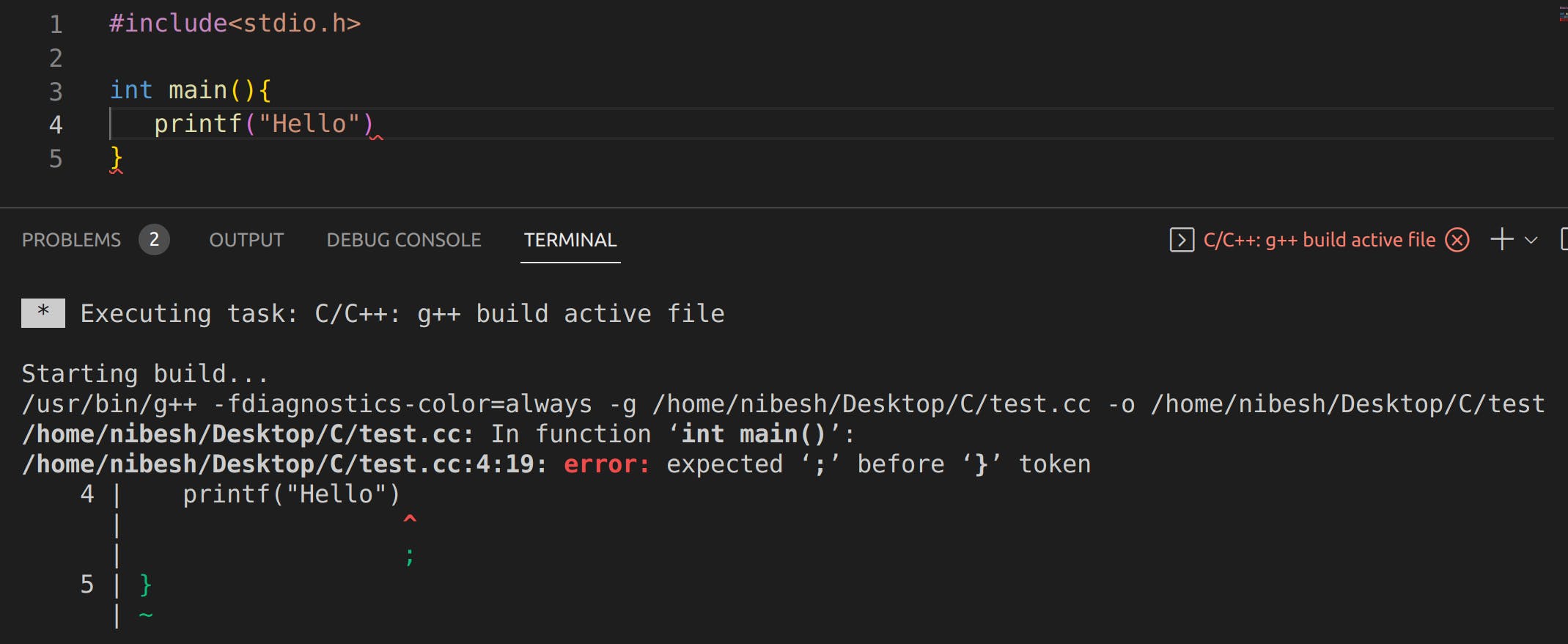

Syntax Error

In programming, syntax is the set of rules for combining keywords, variables, operands, and operators into valid statements. Just as English has rules for writing sentences, each programming language has its own syntax rules. If these rules are not followed, we get a syntax error.

The accompanying image shows one of many possible syntax errors, in a piece of code written in C. The terminal reports a syntax error because the semicolon ";" is missing after the printf statement.

Semantic Error

Semantic error, sometimes also called logical error (although some programmers debate the distinction), produces unintended or undesired output or other behavior, although it may not immediately be recognized as such.

When the syntax of your code is correct but the output is inaccurate, you have a logical error. The computer runs the code normally because there is nothing wrong with the syntax.

Copy and run the code below. It calculates a percentage from fixed, hard-coded values. Can you spot the error in this code?

```c
#include <stdio.h>

float calculatePercentage(void)
{
    float totalMarks = 599;
    float totalFullMarks = 1000;

    // spot the logical error
    float percentage = (totalMarks / totalFullMarks) * 10;

    printf("%f\n", percentage);

    return percentage;
}

int main(void)
{
    calculatePercentage();
    return 0;
}
```

Logical Error vs. Correct Code

```c
// this line contains the logical error
float percentage = (totalMarks / totalFullMarks) * 10;

// this is the correct code
float percentage = (totalMarks / totalFullMarks) * 100;
```

The formula used to calculate the percentage was wrong: it multiplies by 10 instead of 100. Unless the programmer tests the code and checks the value, the error won't be spotted.

Run-Time Error

A run-time error occurs during the execution (run time) of a program. For instance, dividing an integer by zero has no defined result; in C it is undefined behavior and often crashes the program. Other causes of run-time errors include infinite loops, memory leaks, and calls to invalid functions. A run-time error usually stops the program abnormally or makes it stop responding.

Control Structures

Control structures specify the flow of control in a program, i.e. the order in which its statements are executed. Using these self-contained building blocks makes a program clear and easy to follow. Most programming languages provide the following control structures:

Sequence

Selection

Iteration

Sequence

In the sequence control structure, statements are executed one after another, from start to end, without skipping or repeating any lines of code and without any condition.

Selection

Selection is a structure in which a block of code is executed only if a specific condition is satisfied. If/else statements are examples of the selection structure.

Iteration

Sometimes a program needs to repeat some lines of code multiple times. That is when iteration control structures are used. The for, while, and do-while loops are examples of iteration control structures.

Program Design Tools

To make a successful computer program, the program first needs to be well designed. The design decides the structure, goals, look, functionality, packages, and resources required for the program. If the design is not good, the program will not be good either.

Some tools that are used to design programs are:

Algorithm

Pseudocode

Data Flow Diagram

Decision Tree

Flowchart

Context Diagram

ER Diagram


Algorithms

An algorithm is a finite set of steps that defines the solution to a particular problem. It is written in plain English or pseudocode rather than in a programming language, so it is independent of any programming language. An algorithm should be precise and clear. Some features of algorithms are:

It starts with "Start" and ends with "End/Stop".

It has a finite number of steps.

It should take input and produce output.

It should not be restricted to any programming language.

Check out the book for an algorithm to calculate simple interest.

Flowcharts

Flowcharts are graphical representations of a program. They help programmers determine the logical flow of a program and identify the sequence of steps needed to achieve the desired output.

Because a flowchart shows the program's logic visually, it helps programmers spot logical errors before writing code. Flowcharts also make it easier to modify programs in the future. However, they are time-consuming to draw, and branches and loops can make them very complicated. Check out the book for details on the different symbols, such as rectangles and circles, and their meanings in flowcharts.

Flowcharts can be classified into system flowcharts and program flowcharts.

A system flowchart explains the functionality of an entire system, whereas a program flowchart explains how a particular program solves a given task. A system flowchart does not focus on solving a problem but describes the business process as a whole; a program flowchart, on the contrary, focuses on the steps for solving one specific problem.

Pseudocode

The word "pseudo" means false or fake, so pseudocode literally means "fake code": the steps of a program written in plain English instead of a real programming language. Before writing the actual code, we can write these steps in plain English, often as comments in the program. A rough example is given below.

```c
/* Pseudocode for a program that calculates the percentage of a student */
// 1. Input the total marks obtained by the student
// 2. Input the grand total of full marks
// 3. Compute the percentage with the formula: (totalMarks / grandTotal) * 100
// 4. Print the percentage
// 5. Return the percentage
```

Absolute Binary, BCD, ASCII, and Unicode

Absolute Bits

In mathematical calculations, we use the "+" and "-" symbols to represent positive and negative values respectively. A computer has no "+" or "-" symbols, only values represented in binary form (0 and 1). Hence, to tell positive and negative numbers apart, the first (leftmost) bit of a binary number is used as the sign bit: 0 means positive and 1 means negative. This method is called sign-magnitude representation. Numbers are stored in fixed sizes such as 8, 16, 32, or 64 bits. For instance, in 8 bits, +25 and -25 are 00011001 and 10011001 respectively.

Binary Coded Decimal(BCD)

BCD is a simple system for representing decimal numbers in binary. Each decimal digit is converted separately, rather than converting the whole number at once. Each converted digit must have 4 bits; if it has fewer, 0s are added to the front.

Using this conversion, the number 25 becomes 0010 (for 2, instead of 10) followed by 0101 (for 5), i.e. 00100101 in BCD. In pure binary, however, 25 is represented as 11001.

American Standard Code for Information Interchange (ASCII)

It's a standard coding system that assigns numeric values to letters, numbers, punctuation marks, and control characters. It was created in 1968, to achieve compatibility across various computers and peripherals.

ASCII has two sets: Standard ASCII (7 bits, 128 characters) and Extended ASCII (8 bits, 256 characters). Each character is represented by an integer from 0 to 127 in Standard ASCII and from 0 to 255 in Extended ASCII. Check out an ASCII table to see each character and its corresponding number.

Unicode

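ASCII is sufficient for English but not for other languages such as Chinese and Japanese. Thus, Unicode was developed. Unicode was originally designed as a 16-bit character code that could define 65,536 different characters, unlike the 256 characters of extended ASCII, with each character occupying 2 bytes of storage space. Modern Unicode has since grown beyond 16 bits (its code points range from U+0000 to U+10FFFF), and depending on the encoding used (UTF-8, UTF-16, or UTF-32), a character can take from 1 to 4 bytes.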

Conclusion

This is only Part I of Chapter 5 of the book. Please subscribe and stay tuned for the next part, where we'll talk about the C programming language. You can find more blogs in this series on the same site.

Thanks for reading. It is Nibesh Khadka from Khadka's Coding Lounge. Find more of my blogs @ kcl.hashnode.dev